Introducing Indic LLM leaderboard

Introduction

In the ever-evolving landscape of artificial intelligence (AI) and natural language processing (NLP), the focus on Indic languages, spoken by over a billion people in South Asia, is gaining momentum. Despite their vast user base and cultural significance, Indic languages face numerous challenges, ranging from data scarcity to the lack of sophisticated language tools. In this blog post, we'll delve into Indic Eval, a nimble evaluation suite crafted for appraising Indic LLMs across various tasks. Furthermore, we'll look into a leaderboard equipped with specialized benchmarks to assess and compare the performance of Indic Eval systems. This platform offers a holistic approach to evaluating model efficacy in the Indic language modeling sphere.

Why an Indic LLM Leaderboard is Required ?

Recent advancements in Indic Large Language Models (LLMs) underscore progress, yet the absence of a unified evaluation framework complicates tracking and comparison. This challenge exacerbates existing issues like data scarcity and inadequate language tools. A unified evaluation framework with benchmarks is crucial to overcome these challenges and drive meaningful advancement in the field.

About CognitiveLab:

Founded by Adithya S K, CognitiveLab specializes in providing AI solutions at scale and undertaking research-based tasks. This initiative aims to create a unified platform where Indic LLMs can be compared using specially crafted datasets. Originally developed for internal use, CognitiveLab is now open-sourcing this framework to further aid the Indic LLM ecosystem.

After the release of Amabri, a 7b parameter English-Kannada bilingual LLM, CognitiveLab sought to compare it with other open-source LLMs to identify areas for improvement. Thus, the Indic LLM suite was born, consisting of two projects:

Solution

- Indic-Eval: A lightweight evaluation suite tailored specifically for assessing Indic LLMs across a diverse range of tasks, aiding in performance assessment and comparison within the Indian language context.

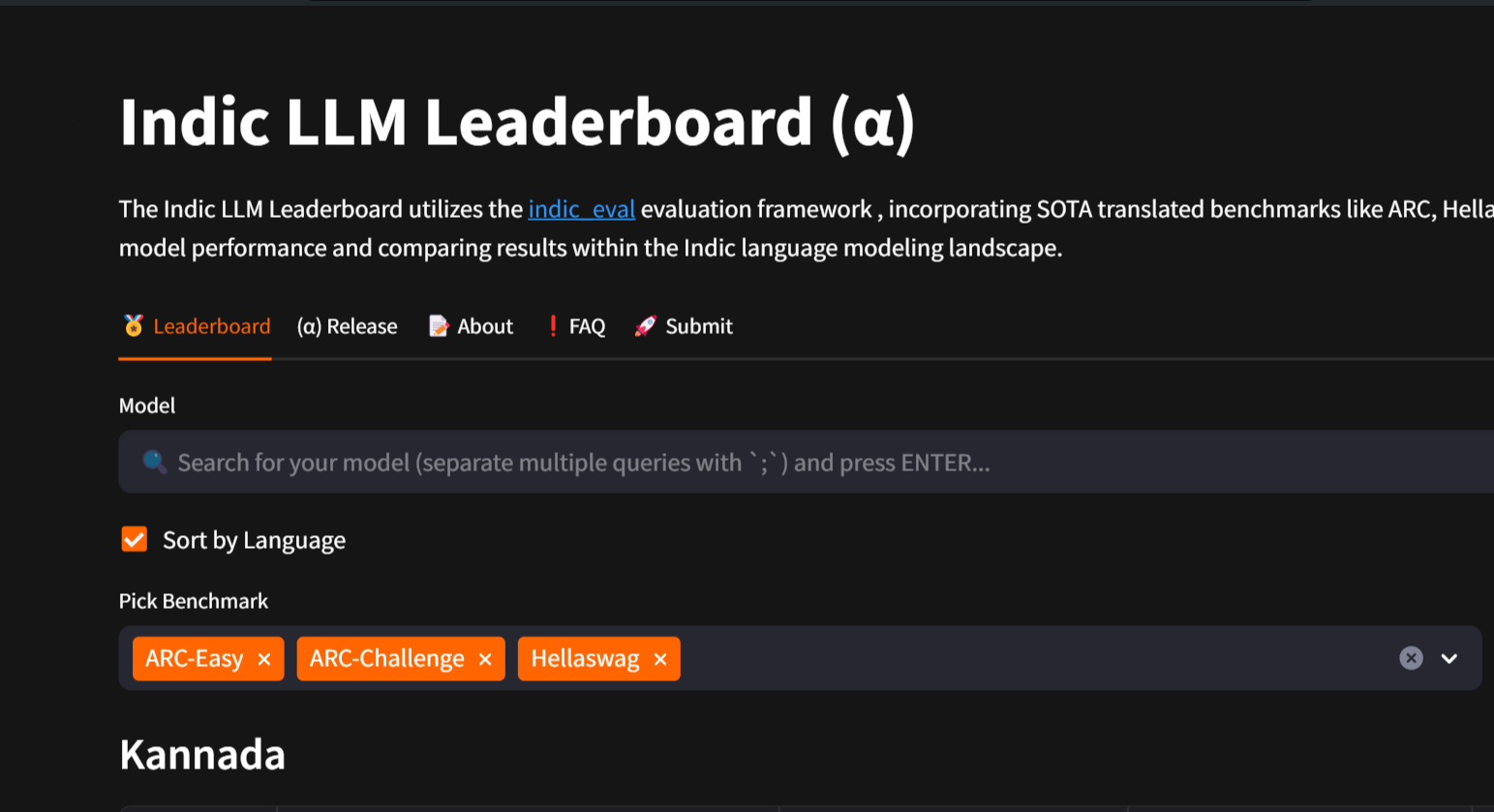

- Indic LLM Leaderboard: Utilizes the indic_eval evaluation framework, incorporating state-of-the-art translated benchmarks like ARC, Hellaswag, MMLU, among others. Supporting seven Indic languages, it offers a comprehensive platform for assessing model performance and comparing results within the Indic language modeling landscape.

What comes with the alpha release

The alpha release of the Indic LLM Leaderboard and Indic Eval. These tools are pivotal steps towards standardizing evaluations within the field.

The Indic LLM Leaderboard is an evolving platform, aiming to streamline evaluations for Language Model (LLM) models tailored to Indic languages. While this alpha release is far from perfect, it signifies a crucial initial step towards establishing evaluation standards within the community.

Features:

As of this release, the following base models have been added into the leaderboard to use are reference:

meta meta-llama/Llama-2-7b-hfgoogle/gemma-7b

Tasks incorporated into the platform:

ARC-Easy:{language}ARC-Challenge:{language}Hellaswag:{language}

For evaluation purposes, each task includes 5-shot prompting. Further experimentation will determine the most optimal balance between evaluation time and accuracy.

we are currently testing a lot more benchmarking datasets and planning to integrate with indic_eval

Datasets:

Datasets utilized for evaluation are accessible via the following link: Indic LLM Leaderboard Eval Suite

Rationale for Alpha Release:

The decision to label this release as alpha stems from the realization that extensive testing and experimentation are necessary. Key considerations include:

- Selection of appropriate metrics for evaluation

- Determination of the optimal few-shot learning parameters

- Establishment of the ideal number of evaluation samples within the dataset

Roadmap for Next Release:

Anticipate the following enhancements in the upcoming release:

- Enhanced testing and accountability mechanisms

- A refined version of the leaderboard

- Defined benchmarks and standardized datasets

- Bilingual evaluation support

- Expansion of supported models

- Implementation of more secure interaction mechanisms

- Addition of support for additional languages

How to show up on the Leaderboard

Here are the steps you will have to follows to put your model on the Indic LLM leaderboard

Clone the repo:

git clone <https://github.com/adithya-s-k/indic_eval>

cd indic_eval

Create a virtual environment using virtualenv or conda depending on your preferences. We require Python 3.10 or above:

conda create -n indic-eval-venv python=3.10 && conda activate indic-eval-venv`Install the dependencies. For the default installation, you just need:`

pip install .

If you want to evaluate models with frameworks like accelerate or peft, you will need to specify the optional dependencies group that fits your use case (accelerate, tgi, optimum, quantization, adapters, nanotron):

pip install .[optional1,optional2]

The setup tested most is:

pip install .[accelerate,quantization,adapters]

If you want to push your results to the Hugging Face Hub, don't forget to add your access token to the environment variable HUGGING_FACE_HUB_TOKEN. You can do this by running:

huggingface-cli login

Command to Run Indic Eval and Push to Indic LLM Leaderboard

accelerate launch run_indic_evals_accelerate.py \

--model_args="pretrained=<path to model on the hub>" \

--language kannada \

--tasks indic_llm_leaderboard \

--output_dir output_dir \

--push_to_leaderboard <yourname@company.com> \

It's as simple as that.👍

For --push_to_leaderboard, provide an email id through which we can contact you in case of verification. This email won't be shared anywhere. It's only required for future verification of the model's scores and for authenticity.

After you have installed all the required packages, run the following command:

For multi-GPU configuration, please refer to the docs of Indic_Eval.

Some common questions

What is the minimum requirement for GPUs to run the evaluation?

- The evaluation can easily run on a single A100 GPU, but the framework also supports multi-GPU based evaluation to speed up the process.

What languages are supported by the evaluation framework?

- The following languages are supported by default:

english,kannada,hindi,tamil,telugu,gujarati,marathi,malayalam

How can I put my model on the leaderboard?

- Please follow the steps shown in the Submit tab or refer to the indic_eval for more details.

How does the leaderboard work?

- After running indic_eval on the model of your choice, the results are pushed to a server and stored in a database. The Frontend Leaderboard accesses the server and retrieves the latest models in the database along with their respective benchmarks and metadata. The entire system is deployed in India and is as secure as possible.

How is it different from the Open LLM leaderboard?

- This project was mainly inspired by the Open LLM leaderboard. However, due to limited computation resources, we standardized the evaluation library with standard benchmarks. You can run the evaluation on your GPUs and the leaderboard will serve as a unified platform to compare models. We used indictrans2 and other translation APIs to translate the benchmarking dataset into seven Indian languages to ensure reliability and consistency in the output.

Why does it take so much time to load the results?

- We are running the server on a serverless instance which has a cold start problem, so it might sometimes take a while.

What benchmarks are offered?

- The current Indic Benchmarks offered by the indic_eval library can be found in this collection: https://huggingface.co/collections/Cognitive-Lab/indic-llm-leaderboard-eval-suite-660ac4818695a785edee4e6f. They include ARC Easy, ARC Challenge, Hellaswag, Boolq, and MMLU.

How much time does it take to run the evaluation using indic_eval?

- Depending on which GPU you are running, the time for evaluation varies.

- From our testing, it takes 5 to 10 hours to run the whole evaluation on a single GPU depends on the GPU

- It's much faster when using multiple GPUs.

How does the verification step happen?

While running the evaluation, you are given an option to push results to the leaderboard with

-push_to_leaderboard <yourname@company.com>. You will need to provide an email address through which we can contact you. If we find any anomaly in the evaluation score, we will contact you through this email for verification of results.

Use Cases:

- Research and Development: Researchers and developers can utilize the Indic LLM Framework to adapt pre-trained LLMs to specific domains and languages, facilitating the development of customized language models tailored to diverse applications such as sentiment analysis, machine translation, and text generation.

- Model Evaluation and Comparison: The Indic Eval Suite enables researchers to evaluate the performance of Indic LLMs across various tasks and benchmarks, allowing for comprehensive performance assessment and comparison. This aids in identifying strengths and weaknesses of different models and guiding future research directions.

- Benchmarking and Progress Tracking: The Indic LLM Leaderboard provides a centralized platform for benchmarking the performance of Indic LLMs against standardized benchmarks and tracking progress over time. This fosters transparency, collaboration, and healthy competition within the research community, driving continuous improvement in Indic language modeling

Call for Collaborative Effort:

To foster collaboration and discussion surrounding evaluations, a WhatsApp group is being established and we can also connect on Hugging faces discord indic_llm channel

Contribute

All the projects are completely open source with different licenses, so anyone can contribute.

The current leaderboard is in alpha release, and many more changes are forthcoming:

- More robust benchmarks tailored for Indic languages.

- Easier integration with indic_eval.

Conclusion

The alpha release of the Indic LLM Leaderboard and Indic Eval marks an important first step towards establishing standardized evaluation frameworks for Indic language models. However, it is clear that significant work remains to make these tools truly robust and accountable.

The decision to label this as an alpha release underscores the need for extensive testing, refinement of evaluation metrics, and determination of optimal setup parameters. The path ahead requires close collaboration and active participation from the open-source community.

We call upon researchers, developers, and language enthusiasts to join us in this endeavor. By pooling our collective expertise and resources, we can build a comprehensive, transparent, and reliable system to assess the performance of Indic LLMs. This will not only drive progress in the field but also ensure that advancements truly benefit the diverse linguistic landscape of the Indian subcontinent.

The road ahead is long, but the potential impact is immense. With your support and involvement, we are confident that the Indic LLM Leaderboard and Indic Eval will evolve into reliable and indispensable tools for the Indic language modeling community. Together, let us embark on this journey to unlock the full potential of Indic language technologies.