CognitiveLab Wins Meta's Prestigious Llama Impact Grant!

This non-dilutive funding recognizes Nayana's potential and accelerates our mission to build inclusive, multilingual AI serving 22 global languages including 10 Indic languages.

Key Areas

Our Core Focus in AI Innovation

Data Curation & Processing

Developing novel techniques for large-scale data collection, cleaning, and pre-processing to build high-quality datasets for robust AI models.

Structured Data Extraction

Researching advanced methods to extract structured information from unstructured data sources like documents, images, and audio.

Multimodal Understanding

Exploring architectures that effectively fuse information from diverse modalities (text, vision, audio) for deeper contextual understanding.

Research

Ongoing Research Initiatives

Nayana

Multi-modal, Multi-lingual, Multi-task LLM designed to comprehend and generate content across various languages and modalities.

Indic LLM Leaderboard

Open infrastructure to evaluate and compare language models for Indic languages, promoting transparency and advancement in Indic NLP research.

Ambari

Bilingual Kannada-English large language model designed to bridge language barriers and enable AI applications for Kannada speakers.

Products

Explore our popular open source repositories and products

OmniParse

Convert Anything into Structured Actionable Data

Multi-format input support

AI-powered data extraction

Customizable output structures

Real-time processing

RAG SaaS

Deploy Agentic RAG Solutions at Scale to your Enterprise

AI Engineering Academy

Master Applied AI, One Step at a Time

Prompt Engineering

RAG

AI Agents

Deep Learning


Research &

Explore our contributions to the field of AI research.

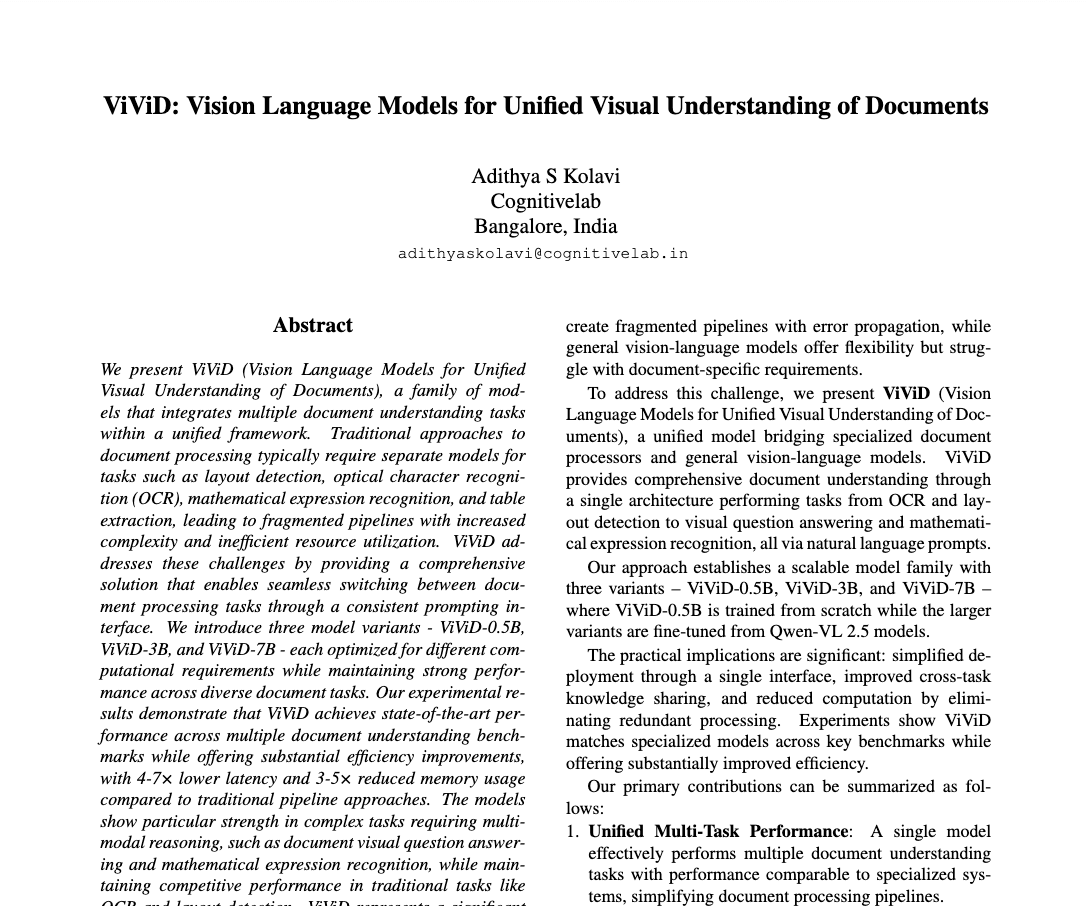

ViViD - Vision Language model for Unified Visual Understanding of Documents

Adithya S Kolavi

CVPR 2025 | Emergent Visual Abilities and Limits of Foundation Models (EVAL-FoMo 2025)

A vision-language model specifically optimized for document understanding tasks, capable of processing diverse document formats with high accuracy.

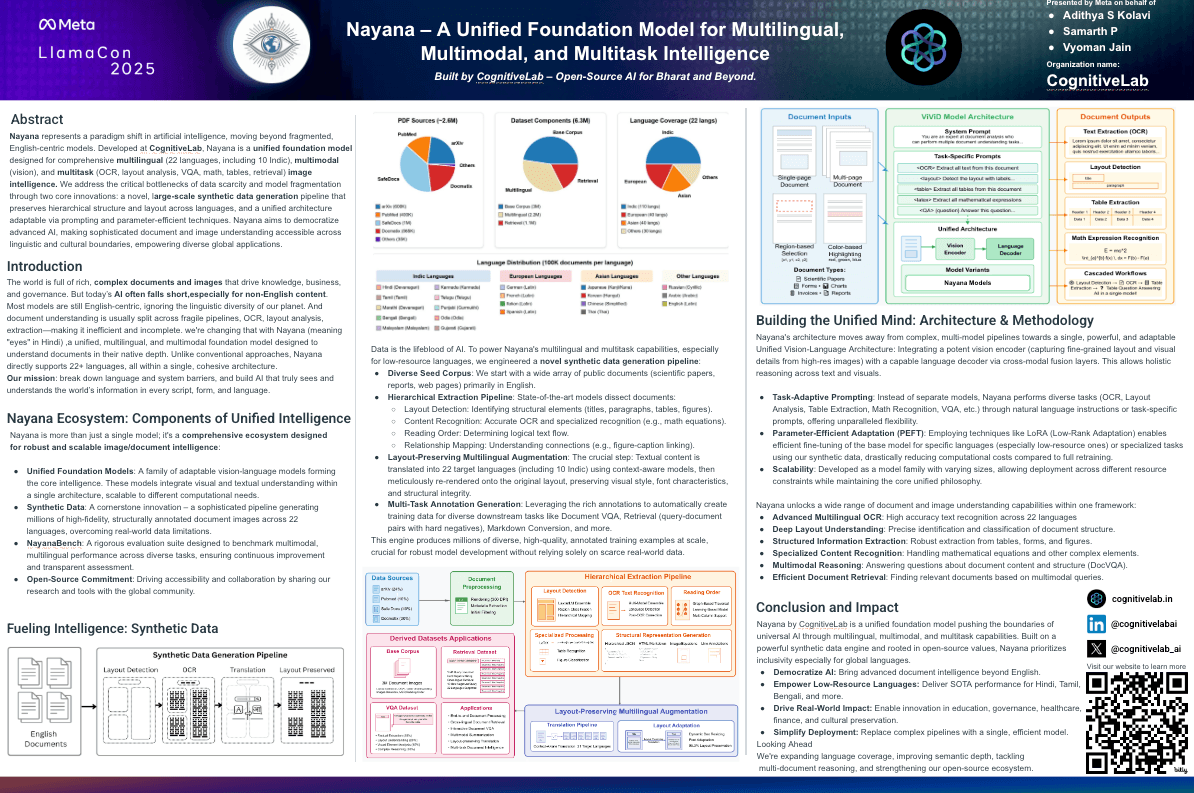

Nayana - A Unified Foundation Model for Multilingual, Multimodal, and Multitask Intelligence

Adithya S Kolavi, Samarth P, Vyoman Jain

LlamaCon 2025 | Llama Impact Grant 2024 winner

Winner of the 2024 Llama Impact Grant from Meta, this paper presents a foundation model architecture designed for multilingual and multimodal applications.

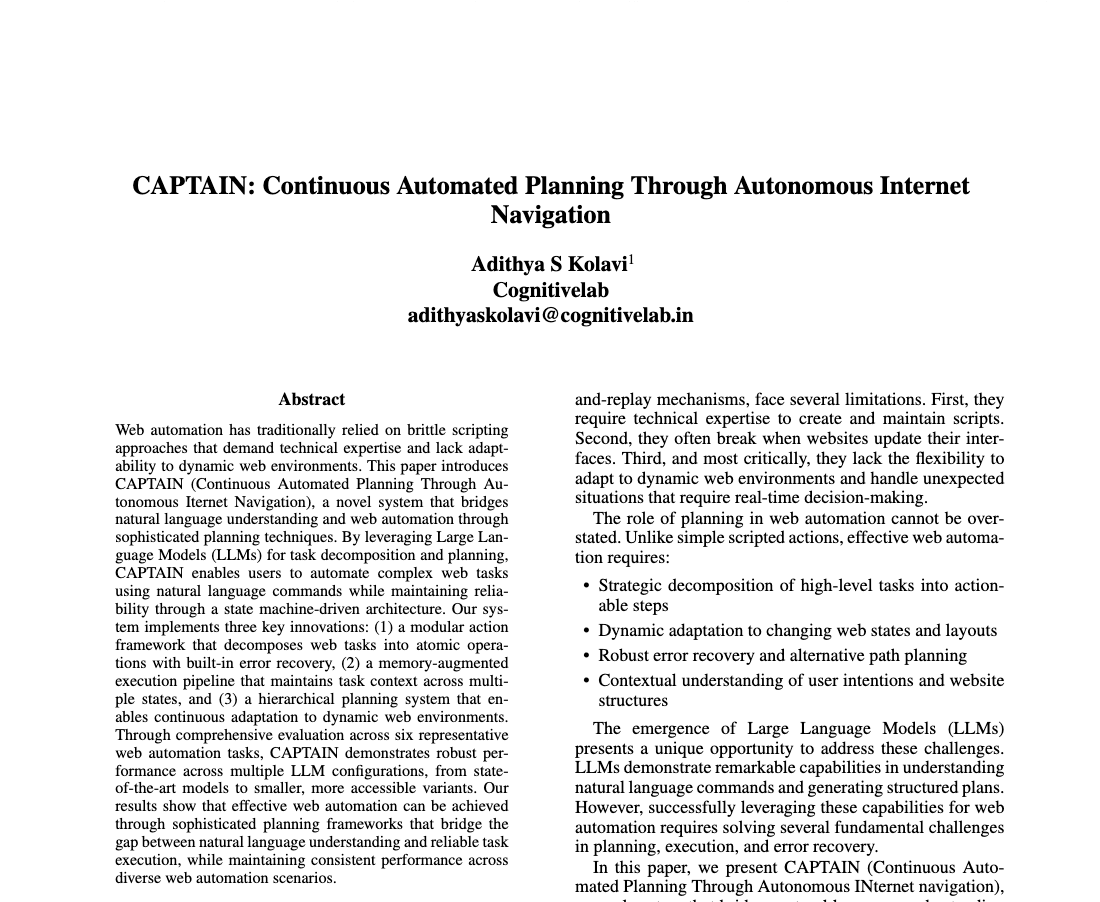

CAPTAIN: Continuous Automated Planning Through Autonomous Internet Navigation

Adithya S Kolavi

AAAI 2025 | Large Language Models for Planning (LM4Plan)

A novel framework for autonomous web navigation and task planning using large language models to perform complex multi-step operations.

Blogs &

Stay updated with our latest blogs and announcements showcasing our innovative projects and research advancements.

Introducing NetraEmbed - SoTA Multimodal Multilingual Document Retrieval

We're excited to announce NetraEmbed and ColNetraEmbed, which achieve a 152% improvement over existing systems in multilingual document retrieval and support 22 languages with state-of-the-art performance.

Read More →

CognitiveLab Wins Meta's Llama Impact Grant 2024 for Project Nayana

CognitiveLab is proud to be selected as a recipient of Meta's prestigious Llama Impact Grant 2024, accelerating our revolutionary Nayana project—a multilingual (22 languages, 10 Indic), multimodal AI ecosystem that democratizes AI across global languages.

Read More →

Introducing AI Engineering Academy: Creating the Next Generation of AI Engineers

AI Engineering Academy offers comprehensive training programs and resources to help aspiring engineers master the technical skills and practical knowledge needed to build, deploy, and maintain AI systems at scale.

Read More →

Introducing OmniParse: Universal Data Parsing

OmniParse is an advanced document parsing platform that uses AI to extract structured data from any document format, enabling businesses to automate document processing workflows with unprecedented accuracy and efficiency.

Read More →

Startup Partners

Llama Impact Grant 2024

If you have any questions, suggestions, or would like to discuss potential collaborations, please don't hesitate to reach out. I'd love to hear from you!

Connect With Us

Opportunities

Join our team or support our research

Join our Team

Application Process

Please fill out the form below to show interest in our open positions. We will review your application and get back to you within 2-3 weeks.

Support Our Research

If you like our research and would like to sponsor our projects and open source initiatives, please get in touch. Your sponsorship will greatly help us continue developing innovative solutions and advancing the field of AI.

- ✓ Support cutting-edge AI research

- ✓ Contribute to open source development

- ✓ Help make AI accessible to everyone